December 8, 2025

Bits and Bots: Analyzing HTTP 404 errors

Every quarter, our State of the Bots report offers a snapshot of how AI bots are interacting with the open web. In the latest edition, covering Q2 2025, one data point particularly caught our attention: how often AI answers contained dead or hallucinated links. These show up as HTTP 404 errors when users click a link in a chatbot and land on a page that doesn’t exist.

At first glance, it might sound like a technical detail. But behind those numbers is a bigger story: AI systems are increasingly the gateways to information, yet their accuracy in sending users to real content is wildly inconsistent, which may reflect the impact of the defenses publishers are putting in place. The data also shows the importance of continuous access to web data, and what happens when content is cached and goes stale.

What’s an HTTP 404?

Every time someone, or something, visits your website, your server replies with a short code that says how it went.

- 200 means “the page loaded successfully”

- 403 means “access forbidden/blocked”

- 404 means “this page does not exist”
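For readers who want to see these codes programmatically, here is a minimal sketch using Python's standard `http.HTTPStatus` enum. The `describe` helper is a hypothetical name introduced only for illustration; it is not part of any TollBit tooling.

```python
from http import HTTPStatus


def describe(status_code: int) -> str:
    """Return the numeric code followed by its standard reason phrase."""
    status = HTTPStatus(status_code)
    return f"{status.value} {status.phrase}"


# The three codes discussed in the list above.
for code in (200, 403, 404):
    print(describe(code))
```

The reason phrases come from the Python standard library and are worded slightly differently from the plain-language descriptions in the list.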

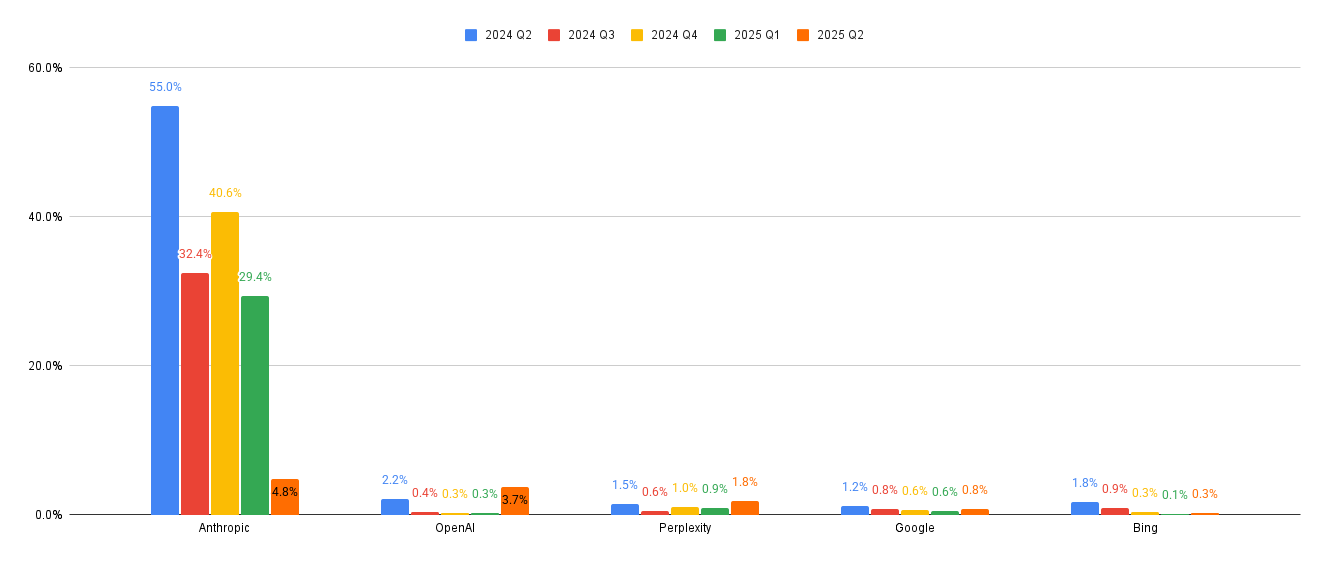

For human readers, a 404 error usually happens when a link is broken or a page has been deleted. The image above shows HTTP 404 rates for Google and Bing. These serve as benchmarks for acceptable 404 rates, since search engines have to be good at indexing content.

So how do the AI platforms, where people increasingly get their answers, compare?

Referral error rates from AI platforms

When it comes to AI bots, a 404 tells a different story: it can be the result of a hallucination or of stale information. An AI application cited a URL that no longer exists or was made up, a user of that application clicked the link, and the click ended in a 404 error. This is a bad experience for end users, who try to click through from the AI chat interface only to find that the pages they were shown don’t actually exist.

TollBit Analytics has access to tens of billions of interactions between the large AI search platforms and users.

Across the billions of interactions analyzed through TollBit, these trends have emerged:

- Perplexity: Among all of the AI platforms we analyzed, Perplexity has the lowest average 404 rate, at 1.16%. This means that users who click on links within Perplexity responses are more likely to land on real, live pages than those who click on links in other platforms. Perplexity's model emphasizes explicit citations and live sources, anchoring responses to current web resources rather than static training material.

- Anthropic: Anthropic had a 55% 404 rate in Q2 2024. To put that in perspective, imagine if more than half of all the links you clicked led to an error page. By Q2 2025, its rate had improved to 4.8%. The timing of this improvement corresponds with Claude, Anthropic’s chatbot, being given access to the real-time web, allowing it to retrieve and cite live URLs rather than relying on extrapolations from training data.

- OpenAI: OpenAI, meanwhile, showed the opposite trajectory. Its 404 rate climbed from 0.3% in Q1 2025 to 3.7% in Q2, signaling that users are increasingly being directed to non-existent pages. While OpenAI’s average 404 rate of 1.38% remains lower than Anthropic’s, it trails Perplexity slightly in link accuracy. This rise may reflect a heavier reliance on older or unverified sources.
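A 404 rate like the ones quoted above is, in essence, the share of referred requests that ended in a 404. The sketch below shows that arithmetic on a handful of made-up records. It is an illustration only, not TollBit's actual methodology: the `not_found_rates` function, the `(referrer, status)` record shape, and the `assistant-a.example` and `assistant-b.example` hostnames are all assumptions, and the sample data is fictional.

```python
from collections import Counter


def not_found_rates(requests):
    """Map each referrer to the fraction of its requests that returned 404.

    `requests` is an iterable of (referrer, status_code) pairs; this record
    shape is a hypothetical simplification of a real access log.
    """
    totals = Counter()
    not_found = Counter()
    for referrer, status in requests:
        totals[referrer] += 1
        if status == 404:
            not_found[referrer] += 1
    return {ref: not_found[ref] / totals[ref] for ref in totals}


# Fictional sample data, for illustration only.
sample = [
    ("assistant-a.example", 200),
    ("assistant-a.example", 404),
    ("assistant-a.example", 200),
    ("assistant-a.example", 200),
    ("assistant-b.example", 200),
    ("assistant-b.example", 200),
]

rates = not_found_rates(sample)
for referrer, rate in sorted(rates.items()):
    print(f"{referrer}: {rate:.1%}")
```

A publisher could run the same calculation over its own server logs, grouping by the referrer header, to compare how reliably different platforms send visitors to pages that exist.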

Why publishers should care

The rise in 404s shows more than technical friction; it reflects the growing impact of the defenses publishers are putting up on their sites.

As more sites limit unauthorized access, through tools like TollBit Bot Paywall or through more advanced cybersecurity tools from our partners (DataDome, HUMAN, Akamai, and Fastly), AI systems that rely on outdated or incomplete data are starting to send users to missing or imagined pages. When these systems can’t retrieve live content, the ripple effects are immediate: broken links, missing citations, and less accurate outputs.

Each of those errors chips away at user trust and also represents a missed opportunity for publishers. Even if referral traffic from AI remains small today, every dead link means one less chance for a real visitor to reach your site, engage with your work, or discover your brand directly.

That’s why this moment creates leverage for content owners. Publishers now have the opportunity to define the conditions under which their content is accessed, cited, and valued.

To that end, we are building a framework in which AI and websites can coexist in mutually beneficial ways. We have built the infrastructure that enables AI to engage with publisher content responsibly, so that content access is sanctioned and fairly compensated.

The Takeaway

Rising 404 error rates are a sign that the web is still in transition. AI systems aren’t reliable without high-quality, publisher-backed content, and the pathways to that content are changing fast.

By putting the right infrastructure in place now, publishers can help define how this next phase of content discovery, accuracy, and value exchange takes shape.