October 24, 2025

How the OpenAI Apps SDK Works

From OpenAI’s perspective, the Apps SDK is the first step towards the ChatGPT App Store. In the same way the App Store and iOS apps unlocked the iPhone’s ecosystem, this SDK establishes a standardized way for developers to build interactive experiences that run directly inside ChatGPT and reach its hundreds of millions of users.

What the SDK actually does

At a high level, the Apps SDK defines a structure for building an MCP (Model Context Protocol) server that can also return UI widgets — the interactive components users see inside ChatGPT. These widgets are synchronized with the conversation at all times, allowing users to view and interact with live data without ever leaving the chat.

Think of it as a compact, conversational frontend and backend working together:

- The MCP server acts as the backend, handling data and tool calls.
- The UI widgets serve as the frontend, rendering live, interactive visuals in the chat.
- ChatGPT.com itself serves as the client that ties the experience together.

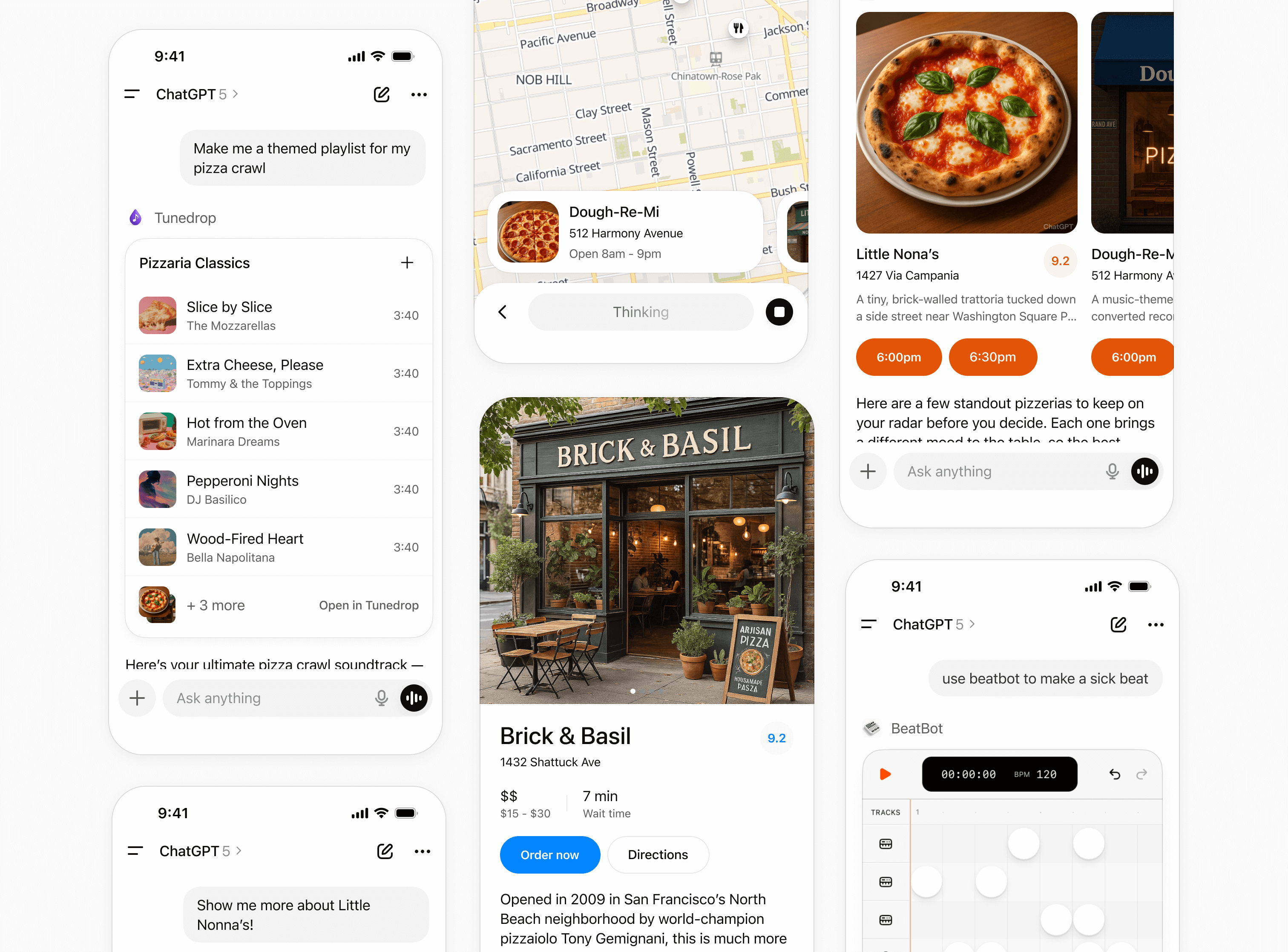

Screenshot from OpenAI

These are all examples of what these UI components look like when rendered in ChatGPT.

How users interact with apps

ChatGPT apps can be triggered in a couple of ways. A user might explicitly add one to a conversation (like typing “@zillow”), or simply ask a question it can answer (for example, “housing prices from zillow”). Either way, ChatGPT matches the query to the right app behind the scenes.

OpenAI has been somewhat ambiguous about how apps will surface when users don’t reference them directly (for instance, “housing prices in Austin”), but they’ve suggested that tool descriptions and metadata will drive this discovery. Over time, this will likely evolve into a new kind of AI search and recommendation layer.

How to think about building a ChatGPT App

For non-technical teams, OpenAI’s Apps SDK might sound like something only engineers can touch — but at a high level, the process mirrors how you’d plan any new digital product.

Here’s how OpenAI recommends thinking about it:

- Identify the use case. Start with a clear goal: what problem does your app solve for users inside ChatGPT? For a publisher, that could mean surfacing live data, answering domain-specific questions, or providing curated insights from your content.

- Break it into tools. Each tool is a specific action or capability your app can perform, like “get latest headlines,” “fetch stock data,” or “summarize article.” These tools are defined on your MCP server.

- Build the UI components. Design widgets that display results in a conversational, easy-to-scan format. The Apps SDK lets you return lightweight UI elements, like lists, cards, or charts, that stay synced with the chat.

- Wire it all together. Connect your tools to the UI so that when a user asks a question, your app fetches data and renders the right widget in response. This is where the `window.openai` bridge comes in, linking your app’s interface to the chat session.

- Deploy and test inside ChatGPT. Once deployed, your app can be added directly into chats or surfaced automatically when it’s relevant to a query. From there, you can refine metadata and tool descriptions to improve discoverability.
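
To make the "break it into tools" step more concrete, here is a minimal sketch of what a single tool definition might look like. It does not use the Apps SDK or any MCP library: the `ToolDefinition` and `ToolResult` shapes, the `get_latest_headlines` name, the `headline-list` widget name, and the sample data are all assumptions made up for illustration.

```typescript
// Hypothetical shapes for illustration only; not the Apps SDK's actual types.
interface ToolResult {
  data: Record<string, unknown>; // structured data for the model and the widget
  widget: string;                // name of the UI component that should render it
}

interface ToolDefinition {
  name: string;
  description: string; // metadata that could help ChatGPT match queries to the tool
  handler: (args: { section?: string }) => ToolResult;
}

// Made-up sample content standing in for a publisher's data source.
const sampleHeadlines = [
  { title: "Markets close higher", section: "finance" },
  { title: "Central bank holds rates", section: "finance" },
  { title: "Local team wins final", section: "sports" },
];

const getLatestHeadlines: ToolDefinition = {
  name: "get_latest_headlines",
  description: "Return the most recent headlines for a given news section.",
  handler: (args) => {
    // Default to the finance section when the caller does not specify one.
    const section = args.section ?? "finance";
    const items = sampleHeadlines.filter((h) => h.section === section);
    return { data: { section, items }, widget: "headline-list" };
  },
};

const financeResult = getLatestHeadlines.handler({ section: "finance" });
console.log(financeResult.widget, financeResult.data);
```

The point of the sketch is the pairing: each tool carries a description (for discovery) and returns both data and a pointer to the widget that should display it. In a real app, the names and wire format would come from OpenAI's documentation rather than from these placeholder types.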

For product and content teams, this workflow shows that building an app for ChatGPT isn’t just about code — it’s about designing an experience around intent. The SDK abstracts most of the complexity, so the focus shifts to defining what value your app provides and how users engage with it.

How it works behind the scenes

Under the hood, three components make every ChatGPT App function:

- ChatGPT.com (the client): the main interface where users interact.
- The MCP server (the backend): defines the tools, resources, and logic.
- UI widgets (the frontend): self-contained HTML/JavaScript components that display data or anything else you want to share via an app.

Here’s how the workflow unfolds:

- A user enters a query in ChatGPT.
- The model determines which tool (from an MCP server) to call.
- That server responds with structured data and a UI component to display it.
- The widget (an HTML fragment rendered in an iframe; think of it as a mini web page running inside ChatGPT) displays the result, such as a list, graph, or visualization, and remains interactive.
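
The four steps above can be sketched as a toy simulation. This is not how ChatGPT is implemented: `chooseTool` uses simple keyword matching as a stand-in for the model's tool selection, and `RegisteredTool`, `ToolResponse`, `get_finance_stories`, and the sample stories are hypothetical names and data invented for the example.

```typescript
// Toy simulation of the workflow steps; every name here is hypothetical.
interface ToolResponse {
  data: { stories: string[] }; // structured data returned by the server
  widgetHtml: string;          // HTML fragment ChatGPT would render in an iframe
}

interface RegisteredTool {
  name: string;
  keywords: string[]; // stand-in for the descriptions the model reads
  run: () => ToolResponse;
}

// Build the widget markup from the structured data.
function renderStoryList(stories: string[]): string {
  const items = stories.map((s) => `<li>${s}</li>`).join("");
  return `<ul>${items}</ul>`;
}

const registeredTools: RegisteredTool[] = [
  {
    name: "get_finance_stories",
    keywords: ["finance", "markets"],
    run: () => {
      const stories = ["Markets close higher", "Central bank holds rates"];
      return { data: { stories }, widgetHtml: renderStoryList(stories) };
    },
  },
];

// Step 2 stand-in: keyword matching replaces the model's actual tool choice.
function chooseTool(query: string): RegisteredTool | undefined {
  const q = query.toLowerCase();
  return registeredTools.find((t) => t.keywords.some((k) => q.includes(k)));
}

// Steps 1 to 4: take a query, pick a tool, call it, return the widget markup.
function handleQuery(query: string): string {
  const tool = chooseTool(query);
  if (!tool) return "No app matched; the model answers on its own.";
  return tool.run().widgetHtml;
}

console.log(handleQuery("What were the biggest stories this week in finance?"));
// prints "<ul><li>Markets close higher</li><li>Central bank holds rates</li></ul>"
```

In the real system the last step happens inside ChatGPT, which renders the returned markup in an iframe; here the function simply returns the HTML string so the flow is visible end to end.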

As an example, a reader asks, “What were the biggest stories this week in finance?” The model triggers a publisher’s MCP tool that fetches story summaries and embeds an interactive widget. This widget can include article excerpts, market data, and links to related analysis—all rendered within the chat window.

From there, users can either continue chatting with the model (triggering new tool calls) or interact directly with the widget (for example, refreshing results or expanding a view).
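
As a rough illustration of the second path (interacting directly with the widget), the sketch below shows what a "refresh" action might do. It assumes a bridge object that exposes the latest tool output and a way to call a tool again. The `WidgetBridge` interface, its `toolOutput` and `callTool` members, and the in-memory fake are assumptions for this example, so check OpenAI's documentation for the actual `window.openai` API before relying on any of these names.

```typescript
// Hypothetical bridge shape, loosely modeled on the idea of window.openai.
interface WidgetBridge {
  toolOutput: { stories: string[] };
  callTool(toolName: string, args: Record<string, unknown>): Promise<{ stories: string[] }>;
}

// In-memory fake so the sketch runs outside ChatGPT.
function createFakeBridge(): WidgetBridge {
  let calls = 0;
  return {
    toolOutput: { stories: ["Markets close higher"] },
    async callTool(_toolName, _args) {
      calls += 1;
      return { stories: [`Refreshed story #${calls}`] };
    },
  };
}

// What a "refresh" button handler in the widget might do.
async function refreshStories(bridge: WidgetBridge): Promise<string[]> {
  const fresh = await bridge.callTool("get_finance_stories", { section: "finance" });
  bridge.toolOutput = fresh; // keep the widget's view of the data current
  return fresh.stories;
}

const demoBridge = createFakeBridge();
refreshStories(demoBridge).then((stories) => console.log(stories));
```

The design idea is that the widget never talks to the publisher's backend directly in this sketch; it asks the host (through the bridge) to call the tool, which keeps the conversation and the widget working from the same data.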

Why this matters

For more on why this matters to publishers and site owners, see our previous post, What OpenAI’s App SDK means for publishers.

For developers, the Apps SDK represents the start of a unified framework for building interactive, LLM-powered experiences. For publishers, it’s another signal that content distribution is being rebuilt around conversational interfaces.

These apps won’t just deliver static results — they’ll become interactive surfaces where users can engage with data, summaries, or even dynamic dashboards, all powered by structured content and standardized protocols like MCP.

What’s still to come

While OpenAI’s documentation outlines how to build and deploy apps, several key details remain under wraps. Questions around monetization and app discovery haven’t yet been fully answered.

It’s also unclear how OpenAI plans to handle app store distribution, ranking, or revenue sharing — or how content providers will be credited and compensated when their material powers ChatGPT responses.

As OpenAI expands its ecosystem, these factors will determine how viable and valuable ChatGPT Apps become for publishers, developers, and brands alike.

At TollBit, we’ll continue tracking these updates closely and share more details as OpenAI releases additional guidance — especially around monetization models, data attribution, and how AI systems will access and license content through standards like MCP.